Real-Time Foveated Rendering in Virtual Reality for Ray-Tracing

Andreas Polychronakis

Type

Thesis

Supervisor

Katerina Mania

Collaborators

George Alex Koulieris

Research Domain

• Eye-Tracking

• Foveated Rendering

• Real-Time Graphics

Abstract

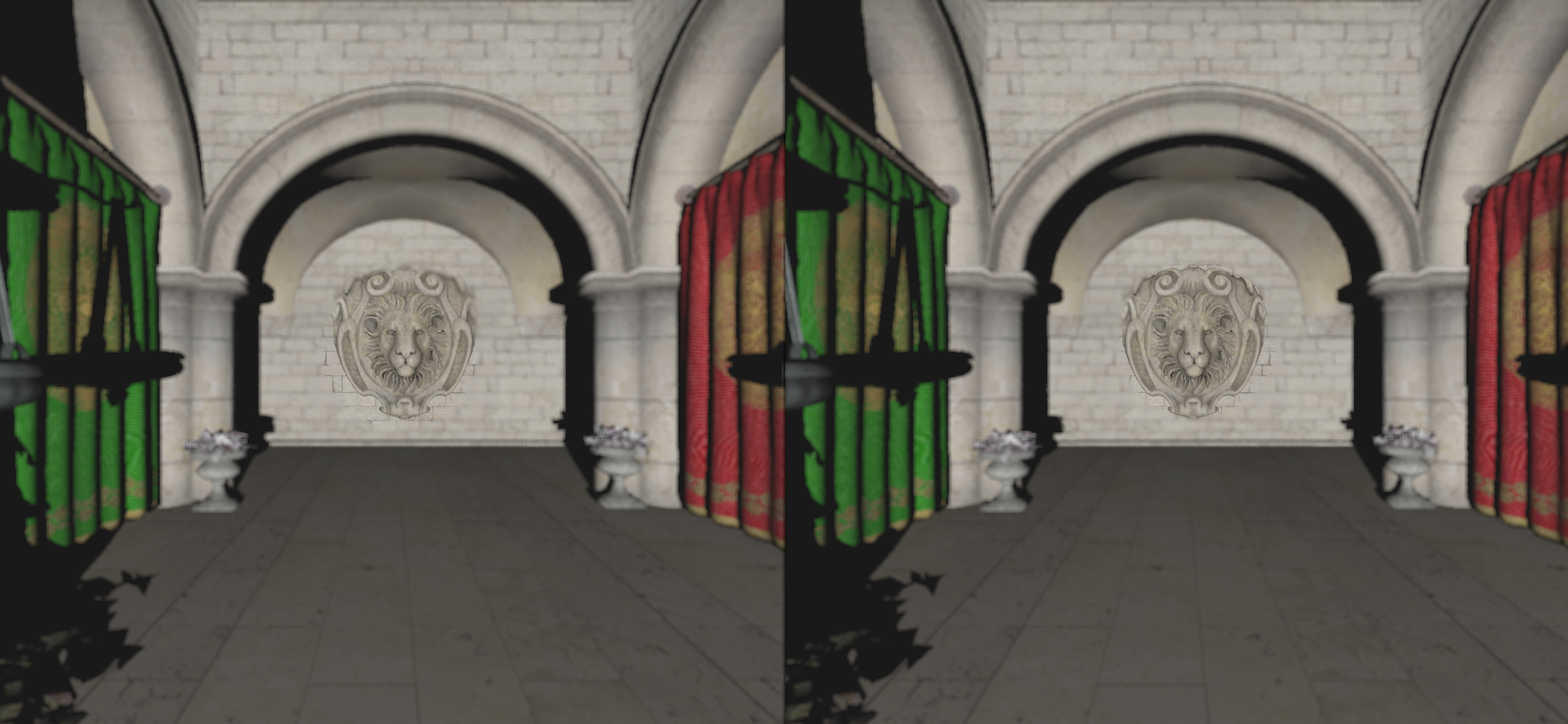

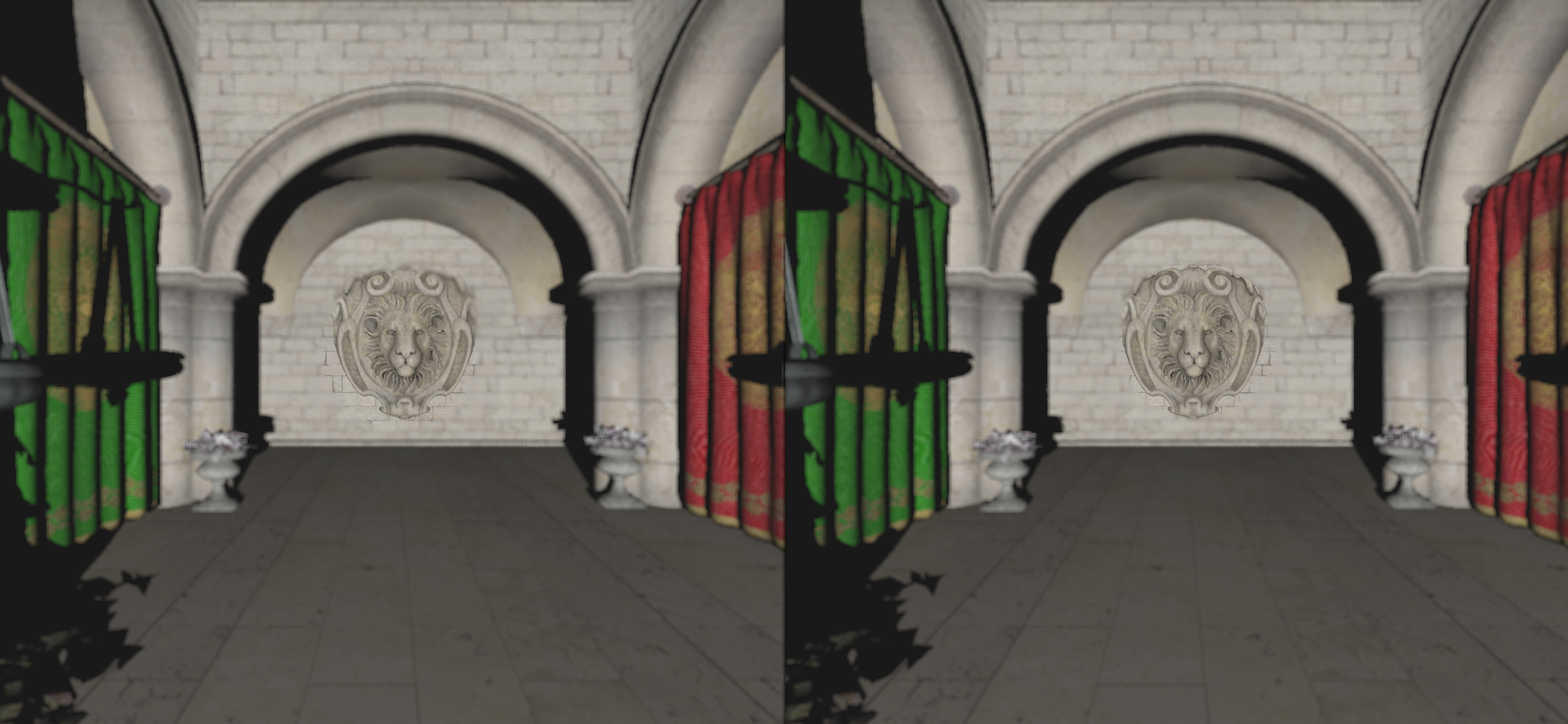

The goal of this thesis is the development of software supporting photorealistic 3D graphics for Virtual or Augmented Reality environments. Foveated rendering uses an eye tracker embedded in a Virtual Reality headset to render images with progressively less detail in the peripheral vision, outside the region on which the fovea is fixated. This reduces the computational cost of rendering without any noticeable change in image quality. The ray-tracing algorithm produces superior rendering quality compared to rasterization; however, its computational cost is high because of the intensive ray intersection calculations against the 3D scene, which makes real-time ray-tracing challenging. We propose a ray-tracing rendering pipeline to which we apply foveated rendering, so that a higher frame rate is achieved without a perceived reduction in quality.
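As an illustration of "progressively less detail in the periphery", the sketch below maps a pixel's angular distance from the gaze point to a sampling density. It is a minimal sketch under stated assumptions, not the thesis implementation: the function names, the linear falloff, and every default value (`fovea_deg`, `periphery_deg`, `min_density`) are hypothetical and are not taken from the thesis.

```python
import math


def eccentricity_deg(px, py, gaze_x, gaze_y, pixels_per_degree):
    """Angular distance (degrees) of a pixel from the gaze point.

    Assumes a constant pixels-per-degree factor, which is only a
    reasonable approximation near the center of the display.
    """
    return math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree


def sampling_density(ecc_deg, fovea_deg=5.0, periphery_deg=30.0, min_density=0.25):
    """Fraction of pixels to ray trace at a given eccentricity.

    Hypothetical falloff: full density inside the foveal zone, then a
    linear ramp down to `min_density` at `periphery_deg` and beyond.
    """
    if ecc_deg <= fovea_deg:
        return 1.0
    if ecc_deg >= periphery_deg:
        return min_density
    t = (ecc_deg - fovea_deg) / (periphery_deg - fovea_deg)
    return 1.0 + t * (min_density - 1.0)


if __name__ == "__main__":
    # Density at a few eccentricities, with the gaze at the origin.
    for ecc in (0.0, 10.0, 20.0, 40.0):
        print(ecc, sampling_density(ecc))
```

A smoother falloff, for example one derived from a visual acuity model, could replace the linear ramp without changing the interface.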

This is achieved by using an RTX 2060, a graphics processing unit (GPU) from Nvidia's newest generation, together with the OptiX software, to obtain the highest available performance for ray tracing. Arrington Research’s Viewpoint EyeTracker, embedded in the NVIS SX111 VR Head Mounted Display (HMD), monitors gaze position in real time. In our work, we take advantage of the eye-tracking capabilities of our HMD to separate the center of the user’s field of view from the periphery. We then reduce the ray-tracing sampling in the periphery, tracing rays for only a subset of the pixels. Next, we fill the remaining blank pixels by duplicating the values of their closest sampled neighbors. Finally, we apply Gaussian blurring to hide the imperfections in the reconstructed frame.
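The three steps described above (sparse peripheral sampling, pixel duplication, Gaussian blurring) can be sketched on the CPU as follows. This is an illustrative sketch only: the thesis pipeline runs on the GPU with OptiX, and the regular sampling grid, the `stride` and `fovea_radius` parameters, the 3x3 kernel, and the `trace` callback standing in for the ray tracer are all assumptions made for this example.

```python
import math


def render_foveated(width, height, gaze, fovea_radius, trace, stride=2):
    """Trace every foveal pixel but only a sparse grid of peripheral pixels,
    then fill the untraced pixels by duplicating a neighboring sample.

    `trace(x, y)` is a hypothetical stand-in for the ray tracer and returns
    one grayscale value per pixel. Returns the frame and the traced count.
    """
    gx, gy = gaze
    frame = [[None] * width for _ in range(height)]
    traced = 0
    for y in range(height):
        for x in range(width):
            in_fovea = math.hypot(x - gx, y - gy) <= fovea_radius
            on_grid = x % stride == 0 and y % stride == 0
            if in_fovea or on_grid:
                frame[y][x] = trace(x, y)
                traced += 1
    # Pixel duplication: copy the sparse-grid sample of the enclosing block.
    for y in range(height):
        for x in range(width):
            if frame[y][x] is None:
                frame[y][x] = frame[y - y % stride][x - x % stride]
    return frame, traced


def blur_periphery(frame, gaze, fovea_radius):
    """Apply a 3x3 Gaussian-like (binomial) blur outside the foveal region."""
    height, width = len(frame), len(frame[0])
    gx, gy = gaze
    kernel = ((1, 2, 1), (2, 4, 2), (1, 2, 1))  # weights sum to 16
    out = [row[:] for row in frame]
    for y in range(height):
        for x in range(width):
            if math.hypot(x - gx, y - gy) <= fovea_radius:
                continue  # keep the fovea at full sharpness
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    sy = min(max(y + dy, 0), height - 1)  # clamp at borders
                    sx = min(max(x + dx, 0), width - 1)
                    acc += kernel[dy + 1][dx + 1] * frame[sy][sx]
            out[y][x] = acc / 16.0
    return out


if __name__ == "__main__":
    # Toy "scene": a diagonal intensity gradient instead of real ray tracing.
    sparse, count = render_foveated(8, 8, (4, 4), 2, lambda x, y: float(x + y))
    final = blur_periphery(sparse, (4, 4), 2)
    print(count, "of", 8 * 8, "pixels traced")
```

In this sketch, the saving comes from the reduced number of `trace` calls; the duplication and blur passes are cheap image-space operations compared to tracing a ray per pixel.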

Evaluations and user tests show that our method achieves real-time frame rates, while visual differences compared to fully rendered images are hardly perceivable. Our approach therefore achieves foveated real-time ray-tracing, displayed in a binocularly eye-tracked Head Mounted Display, for complex 3D scenes, without any perceived visual differences compared to full-scale ray-tracing. Such techniques could be applied to gaze-based interaction in 3D scenes in software developed for Virtual or Augmented Reality applications. The technique is demonstrated on scenes that are 3D reconstructions of cultural heritage sites, with the aim of supporting their scientific documentation in Virtual or Augmented Reality applications.