Motion Capture Generation from Videos Through Neural Networks

Kyriakos Christodoulidis

Type

Thesis

Supervisor

Katerina Mania

Research Domain

• Machine Learning

• Motion Capture

Abstract

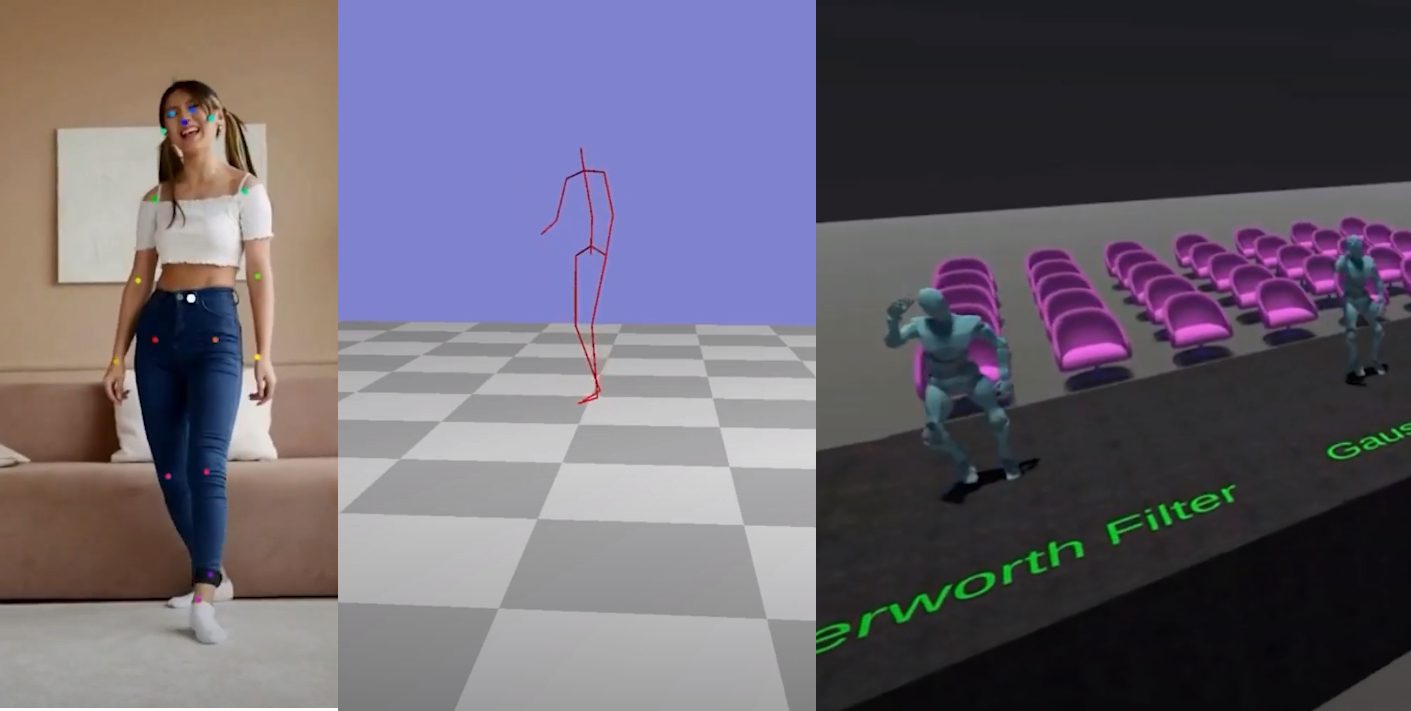

Motion capture methods are either very expensive or of poor quality. We therefore propose a method that lets anyone with an above-average computer generate single-person digital motion clips for free. Many researchers have recently used neural networks to estimate 3D human pose from a single video. Our approach uses three well-known pre-trained models: the first two estimate the 2D pose in each frame of the video, and the third converts these 2D poses into 3D poses. We then estimate the position of the person in each frame by calculating the person's depth in the image. Together, the 3D poses and the positions constitute the motion data we set out to recover. By importing these data into a skeleton that contains all the estimated bones, we create a Biovision Hierarchy (BVH) file that can be imported into 3D computer graphics software. At this point, the generated BVH file contains noise from the neural networks, so we propose applying filters that remove this noise without significantly affecting the motion data. Furthermore, we converted the raw Python code into a Windows application to provide a user-friendly environment. Finally, we added functions to this application so that the user can visualize, process, and edit the results from the BVH files.
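The noise-filtering step can be illustrated with a minimal sketch. The abstract does not name the filters used, so the centered moving average below is an assumption chosen for simplicity, not the thesis's method. The function names `moving_average` and `smooth_channels` and the frame layout (one list of channel values per frame, as in the MOTION section of a BVH file) are hypothetical.

```python
def moving_average(values, window=5):
    """Smooth a 1D sequence with a centered moving average.

    Near the ends of the sequence the window shrinks, so the output
    has the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def smooth_channels(frames, window=5):
    """Smooth every channel of a motion clip independently.

    `frames` is a list of per-frame lists of channel values
    (assumed layout: one row per frame, one column per channel).
    """
    if not frames:
        return []
    # Transpose to per-channel columns, filter each, transpose back.
    columns = list(zip(*frames))
    smoothed_columns = [moving_average(list(col), window) for col in columns]
    return [list(row) for row in zip(*smoothed_columns)]


if __name__ == "__main__":
    # Two channels over five frames; the spike in frame 2 of the first
    # channel stands in for network jitter.
    clip = [[0.0, 10.0], [0.0, 10.0], [9.0, 10.0], [0.0, 10.0], [0.0, 10.0]]
    for row in smooth_channels(clip, window=3):
        print(row)
```

A wider window removes more jitter but also flattens fast, genuine motion, which is the trade-off the abstract refers to. Averaging rotation channels stored in degrees is only safe while the values do not wrap around at ±180; a real pipeline would need to unwrap the angles or filter in another rotation representation first.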